Chapter 3

How algorithms diffuse and amplify misinformation

Lead authors: Victor Galaz, Stockholm Resilience Centre, and Stefan Daume, Stockholm Resilience Centre

The way information moves in digital social networks is not random. Photo: Urupong via Canva.

Digital platforms are designed to maximize engagement. Recommender systems play a key role, as they shape digital social networks and the flow of information. Weaknesses in their underlying algorithmic systems are already being exploited in attempts to influence public opinion on climate and sustainability issues. Automation through social bots also plays a role, although its impact is contested.

In 2010, a team of political scientists in collaboration with researchers at Facebook conducted a gigantic experiment on the platform. It included “all users of at least 18 years of age in the United States who accessed the Facebook website on 2 November 2010, the day of the US congressional elections”. This 61-million-person experiment tested whether a simple tweak in the newsfeed of users – adding an “I Voted” button and information about friends who had voted – could impact voting behaviour.

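The results were clear: this small design change led to higher real-world voting turnout. Users who saw the social message, with pictures of friends who had already voted, were 2.08% more likely to click the “I Voted” button than users who saw only an informational message, and a check against public voting records showed that the message also raised actual turnout. Nearly all of the spillover to users' friends occurred between close friends (Bond et al., 2012).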

This controversial experiment illustrates several issues that are key to understanding how digital platforms interact with the creation and diffusion of mis- and disinformation, and with its impacts.

First, digitalization and the expansion of social media have not only vastly increased the scale of social networks, but also transformed their properties. 3.6 billion people use social media today. Through it, they access a digital media environment in which language barriers are slowly but surely eroding, as new digital tools make communication across borders increasingly frictionless. These new connections are transforming the structure of social networks in ways that allow for immediate communication across vast geographical distances (Bak-Coleman et al., 2021).

Second, the way information moves in these far-reaching digital social networks is not random. It is fundamentally shaped by the way digital media platforms and their recommender systems are designed to maximize engagement and to induce other behavioural responses among their users (Kramer et al., 2014; Coviello et al., 2014). The diffusion of information can also be exploited by outside actors attempting to manipulate public opinion online. Three common approaches are (1) targeting the way algorithmic systems operate, (2) carefully crafting viral messages to sow confusion, and (3) using automation tools such as ‘social bots’.

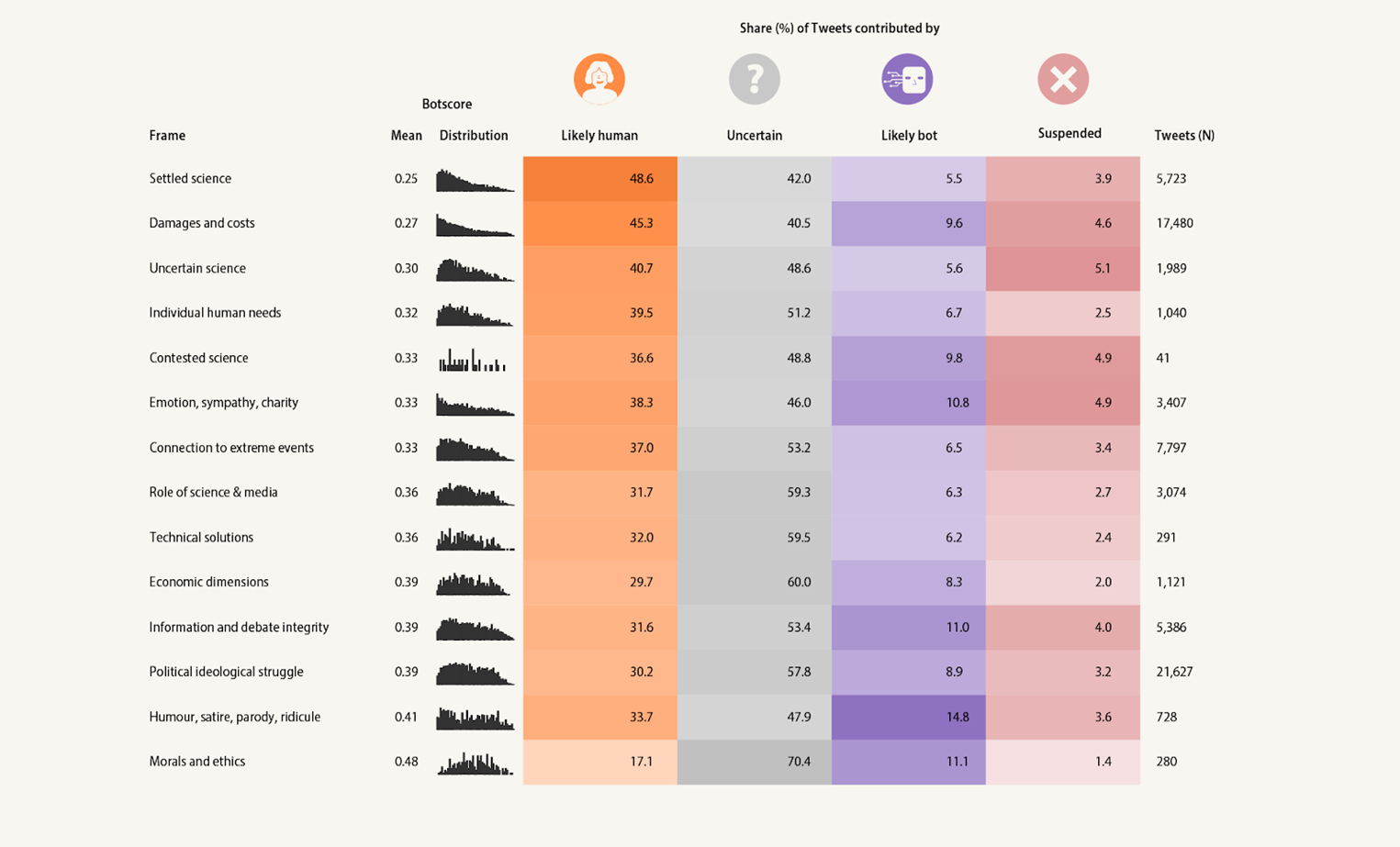

The figure illustrates how social bots influenced the diffusion of different climate change frames via Tweets about the 2019/2020 Australia bushfires. The results indicate that different framings of climate change are associated with distinctive automation signatures. Figure: Azote.

Social bots mimic human behaviour online and can be used to amplify certain types of information, such as climate denialism, or to widen social divisions online (Gorwa and Guilbeault, 2018; Shao et al., 2018). Our own work (Daume et al., 2023) shows that social bots play a non-negligible role in the climate change conversation. They amplify both information that supports climate action and information that opposes it, especially information that appeals to emotions such as sympathy or humour.
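One simple, hypothetical way to express an “automation signature” for each climate change frame is the share of its retweets that come from accounts a bot-detection classifier scores as likely automated. The Python sketch below assumes such scores already exist; the frame labels, scores and the 0.5 cut-off are invented for illustration, and the measure is not necessarily the one used by Daume et al. (2023).

```python
from collections import defaultdict

# Hypothetical toy data: (frame, bot_score) for each retweet.
# bot_score is assumed to come from some bot-detection classifier, scaled to [0, 1].
RETWEETS = [
    ("frame_a", 0.9), ("frame_a", 0.2), ("frame_a", 0.1),
    ("frame_b", 0.8), ("frame_b", 0.7), ("frame_b", 0.3),
    ("frame_c", 0.6), ("frame_c", 0.1),
]

BOT_THRESHOLD = 0.5  # hypothetical cut-off at or above which an account counts as likely automated


def automated_share(records, threshold=BOT_THRESHOLD):
    """Return, per frame, the fraction of retweets made by likely automated accounts."""
    totals = defaultdict(int)
    automated = defaultdict(int)
    for frame, bot_score in records:
        totals[frame] += 1
        if bot_score >= threshold:
            automated[frame] += 1
    return {frame: automated[frame] / totals[frame] for frame in totals}


for frame, share in automated_share(RETWEETS).items():
    print(f"{frame}: {share:.2f}")
# prints frame_a: 0.33, then frame_b: 0.67, then frame_c: 0.50
```

Comparing such shares across frames, rather than counting bots overall, is what makes it possible to see that different framings can carry different levels of automation.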

Recommender systems play a key role in the dynamics of information diffusion and the evolution of digital social networks (Narayanan, 2023), and they are increasingly infused with deep learning-based AI (Engström and Strimling, 2020). For example, people-recommender systems (such as “People You May Know” on Facebook or “Who to Follow” on Twitter) shape social network structures and thereby influence the information and opinions a user is exposed to online (Cinus et al., 2021). Content-recommender systems (such as “Trends for you”) can reinforce the human preference for content that aligns with a user’s ideology (Bakshy et al., 2015).
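To make the people-recommender mechanism concrete, here is a minimal Python sketch of one common textbook heuristic: ranking non-friends by their number of mutual friends. It is an assumption for illustration only; the actual algorithms behind “People You May Know” or “Who to Follow” are proprietary and use many more signals, and the small graph and the function name `recommend_people` are hypothetical.

```python
from collections import Counter

# Hypothetical undirected friendship graph: user -> set of friends.
friends_graph = {
    "ana": {"ben", "cai"},
    "ben": {"ana", "cai", "dev"},
    "cai": {"ana", "ben", "dev", "eli"},
    "dev": {"ben", "cai"},
    "eli": {"cai"},
}


def recommend_people(graph, user, k=3):
    """Rank users who are not yet friends with `user` by number of mutual friends."""
    friends = graph[user]
    mutual_counts = Counter()
    for friend in friends:
        for candidate in graph[friend]:
            # Skip the user themself and people they are already friends with.
            if candidate != user and candidate not in friends:
                mutual_counts[candidate] += 1
    # Most mutual friends first; ties broken alphabetically for a stable order.
    ranked = sorted(mutual_counts.items(), key=lambda item: (-item[1], item[0]))
    return ranked[:k]


print(recommend_people(friends_graph, "ana"))  # [('dev', 2), ('eli', 1)]
```

Here `ana` is offered `dev` (two mutual friends) ahead of `eli` (one). Because a rule like this favours people who are already close in the network, accepting its suggestions closes triangles within existing clusters, the kind of structural effect on echo chambers examined by Cinus et al. (2021).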

The figure below illustrates four highly simplified models of how a single post propagates. The expansion of digital social networks, recommender systems, and social bots all change the reach of mis- and disinformation. This diffusion has complex secondary effects on perceptions, on the formation of online communities, on collective action, and on identity formation.

The figure illustrates four models of how an individual post propagates: (a) network, (b) algorithmic, (c) affective, and (d) automated amplification. In (a), the post cascades through the network as long as other users choose to propagate it further, for example by sharing or liking it. In the algorithmic model (b), propagation unfolds because users with similar interests (as inferred by recommendation algorithms from, for example, past engagement) are more likely to be recommended the post. In (c), users comment on and reshare posts much more if they elicit strong emotions, and recommender systems pick up highly engaging posts and amplify them even further. In (d), automated accounts (‘social bots’) purposefully share content that elicits strong emotions to increase propagation further. Figure based on Narayanan (2023), design by Azote.
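The four models can be explored with a toy simulation. The Python sketch below is a simple independent-cascade model on a random follower graph, written for illustration and not taken from Narayanan (2023). Every parameter (`share_prob`, `recommend_extra`, `emotion_boost`, `n_bots`) and its value is a hypothetical choice, and recommendation is crudely approximated by showing each share to a few random users rather than to users with genuinely similar interests.

```python
import random
import statistics


def build_followers(n_users, n_follow, rng):
    """Random directed graph: followers[u] lists the n_follow users who see u's shares."""
    return [rng.sample(range(n_users), n_follow) for _ in range(n_users)]


def simulate_reach(followers, rng, share_prob=0.15, recommend_extra=0,
                   emotion_boost=1.0, n_bots=0):
    """Return how many distinct users see one post in a toy independent-cascade model.

    (a) network:     each sharer exposes its followers; a newly exposed user
                     reshares with probability share_prob.
    (b) algorithmic: each share is also shown to recommend_extra random users
                     (a crude stand-in for interest-based recommendation).
    (c) affective:   emotion_boost multiplies the reshare probability (capped at 1).
    (d) automated:   n_bots bot accounts share the post unconditionally at the start.
    """
    n_users = len(followers)
    p = min(1.0, share_prob * emotion_boost)
    frontier = list(range(1 + n_bots))  # user 0 is the author; users 1..n_bots are bots
    seen = set(frontier)
    while frontier:
        next_frontier = []
        for sharer in frontier:
            audience = list(followers[sharer])
            if recommend_extra:
                audience += rng.sample(range(n_users), recommend_extra)
            for user in audience:
                if user not in seen:  # each user decides once, on first exposure
                    seen.add(user)
                    if rng.random() < p:
                        next_frontier.append(user)
        frontier = next_frontier
    return len(seen)


# Cumulative scenarios mirroring (a) to (d); all values are hypothetical.
SCENARIOS = {
    "network": {},
    "algorithmic": {"recommend_extra": 5},
    "affective": {"recommend_extra": 5, "emotion_boost": 2.0},
    "automated": {"recommend_extra": 5, "emotion_boost": 2.0, "n_bots": 20},
}


def mean_reach(n_users=1000, n_follow=5, trials=30):
    """Average reach per scenario over several random cascades on one fixed graph."""
    followers = build_followers(n_users, n_follow, random.Random(0))
    return {
        name: statistics.mean(
            simulate_reach(followers, random.Random(seed), **params)
            for seed in range(trials)
        )
        for name, params in SCENARIOS.items()
    }


for name, reach in mean_reach().items():
    print(f"{name:>12}: {reach:.0f} users reached on average")
```

The scenarios are cumulative, mirroring the caption: (c) adds emotional engagement on top of algorithmic recommendation, and (d) adds bots sharing that emotional content. Averaging over several runs matters because a single cascade can die out early by chance even when the sharing probability is high.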

Lastly, the 61-million-person experiment also illustrates another feature of today’s digital ecosystem: the capacity to mass-produce and test online material to maximize impact. Online material and digital platforms provide an ideal setting for randomized controlled experiments that systematically compare two versions of something, such as a news article or an ad, to find out which performs better; this is often referred to as A/B testing. Combined with a growing capacity to cheaply and quickly mass-produce synthetic text, images and videos using generative AI (see chapter 6), this is pushing us into uncharted and dangerous territory for the future of mis- and disinformation on climate and environmental sustainability issues.
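A/B testing typically comes down to comparing two proportions, such as the click-through rates of two versions of an ad. The minimal Python sketch below uses a pooled two-proportion z-test, one standard choice among several (platforms may also use sequential tests or bandit algorithms); the function name and the click counts are made up for illustration.

```python
import math


def two_proportion_z_test(clicks_a, n_a, clicks_b, n_b):
    """Pooled two-proportion z-test comparing the click-through rates of versions A and B.

    Returns (rate_a, rate_b, z, two-sided p-value).
    """
    rate_a = clicks_a / n_a
    rate_b = clicks_b / n_b
    # Under the null hypothesis both versions share one click rate, estimated from pooled data.
    pooled = (clicks_a + clicks_b) / (n_a + n_b)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / standard_error
    # Two-sided p-value for a standard normal: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return rate_a, rate_b, z, p_value


# Hypothetical experiment: each version shown to 10,000 randomly assigned users.
rate_a, rate_b, z, p = two_proportion_z_test(clicks_a=200, n_a=10_000, clicks_b=260, n_b=10_000)
print(f"A: {rate_a:.1%}  B: {rate_b:.1%}  z = {z:.2f}  p = {p:.4f}")
```

With these invented numbers, version B's 2.6% click-through rate beats version A's 2.0% with z ≈ 2.83 and a two-sided p ≈ 0.005, so B would be declared the better-performing version.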

References

Bak-Coleman, J. B., Alfano, M., Barfuss, W., Bergstrom, C. T., Centeno, M. A., Couzin, I. D., ... & Weber, E. U. (2021). Stewardship of global collective behavior. Proceedings of the National Academy of Sciences, 118(27), e2025764118.

Bakshy, E., Messing, S., & Adamic, L. A. (2015). Exposure to ideologically diverse news and opinion on Facebook. Science, 348(6239), 1130–1132. https://doi.org/10.1126/science.aaa1160

Bond, R. M., Fariss, C. J., Jones, J. J., Kramer, A. D. I., Marlow, C., Settle, J. E., & Fowler, J. H. (2012). A 61-million-person experiment in social influence and political mobilization. Nature, 489(7415), 295–298.

Cinus, F., Minici, M., Monti, C., & Bonchi, F. (2021). The effect of people recommenders on echo chambers and polarization. arXiv. https://arxiv.org/abs/2112.00626

Coviello, L., Sohn, Y., Kramer, A. D. I., Marlow, C., Franceschetti, M., Christakis, N. A., & Fowler, J. H. (2014). Detecting emotional contagion in massive social networks (R. Lambiotte, Ed.). PLoS ONE, 9(3), e90315. https://doi.org/10.1371/journal.pone.0090315

Daume, S., Galaz, V., & Bjersér, P. (2023). Automated framing of climate change? The role of social bots in the Twitter climate change discourse during the 2019/2020 Australia bushfires. Social Media + Society, 9(2). https://doi.org/10.1177/20563051231168370

Engström, E., & Strimling, P. (2020). Deep learning diffusion by infusion into preexisting technologies – implications for users and society at large. Technology in Society, 63, 101396. https://doi.org/10.1016/j.techsoc.2020.101396

Gorwa, R., & Guilbeault, D. (2018). Unpacking the social media bot: A typology to guide research and policy. Policy & Internet, 12(2), 225–248. https://doi.org/10.1002/poi3.184

Kramer, A. D. I., Guillory, J. E., & Hancock, J. T. (2014). Experimental evidence of massive-scale emotional contagion through social networks. Proceedings of the National Academy of Sciences, 111(24), 8788–8790. https://doi.org/10.1073/pnas.1320040111

Narayanan, A. (2023). Understanding social media recommendation algorithms. The Knight First Amendment Institute. Retrieved April 19, 2023, from https://knightcolumbia.org/content/understanding-social-media-recommendation-algorithms

Shao, C., Ciampaglia, G. L., Varol, O., Yang, K.-C., Flammini, A., & Menczer, F. (2018). The spread of low-credibility content by social bots. Nature Communications, 9(1). https://doi.org/10.1038/s41467-018-06930-7

About the authors

Victor Galaz is an associate professor in political science at Stockholm Resilience Centre at Stockholm University. He is also programme director of the Beijer Institute’s Governance, Technology and Complexity programme. His research covers, among other topics, the societal challenges created by technological change.

He is currently working on the book “Dark Machines” (for Routledge) about the impacts of artificial intelligence, digitalization and automation on the biosphere.

Stefan Daume is a post-doctoral researcher at Stockholm Resilience Centre at Stockholm University. His research explores connections between digital technologies and sustainability, with particular focus on the promises and risks of social media for public engagement with environmental challenges.
